Section Three - Black Box

A story about Tinder, a gaydar and a robot solving a Rubik's Cube (6 minutes)

In this section we take a closer look at how machine learning works, and what it means for transparency.

AI is everywhere

As we have already stated, AI is already built into many of the things we use.

Forms of (weak) artificial intelligence (plain old algorithms, machine learning, deep learning, neural networks) are already active all around us in everyday applications such as Google (and Google Maps), the Facebook newsfeed, the trending topics on Twitter, the recommendations on YouTube and Netflix, the surveillance cameras at Schiphol, and so on.

AI assists us, but as we saw in crash course one, it also changes and shapes us.

Example: Tinder.

Among other things, Tinder used to rely on an algorithm built around the Elo score. The Elo score comes from the chess world, where it is used to rank players. A little bit nerdy, but Tinder was probably not invented by people who did well in the pub. In Tinder, the Elo score worked as follows: if you swiped right on someone with a higher score and they swiped right on you as well, your score went up; if they did not, it went down. This way you were slowly clustered with the people you had the greatest chance of matching with. However, it also meant that if you thought you only saw ugly people on Tinder, those were the people who found you attractive!

You were an AI-certified magnet for ugly people.
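Tinder never published its exact formula, so the sketch below simply assumes the standard chess Elo update. Each person has a rating; an "expected score" is computed from the rating difference, and after each "game" the rating moves by a factor K times the difference between what happened and what was expected. Treating a match as a win and no swipe back as a loss, and using K = 32, are illustrative assumptions, not Tinder's documented settings.

```python
def expected_score(rating: float, other_rating: float) -> float:
    """Chess Elo: the expected 'win' chance of `rating` against `other_rating`."""
    return 1.0 / (1.0 + 10 ** ((other_rating - rating) / 400))


def elo_update(rating: float, other_rating: float, won: bool, k: float = 32.0) -> float:
    """Return the new rating after one 'game'.

    Assumed Tinder mapping (hypothetical): won=True means the other person
    swiped back (a match), won=False means they did not. k=32 is a commonly
    used chess value, chosen here for illustration only.
    """
    actual = 1.0 if won else 0.0
    return rating + k * (actual - expected_score(rating, other_rating))


if __name__ == "__main__":
    me = 1400.0
    # A match with someone rated higher raises my score a lot...
    print(round(elo_update(me, 1600.0, won=True), 1))   # → 1424.3
    # ...and a rejection by someone rated lower costs me a lot.
    print(round(elo_update(me, 1200.0, won=False), 1))  # → 1375.7
```

A match with someone rated 200 points higher gains about 24 points, while a match with someone rated 200 points lower gains only about 8. Winning against a stronger "opponent" counts for more, which is what slowly sorts users into clusters of similar scores.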

Tinder says it no longer uses the Elo score, but it is vague about how its algorithm works today. Maybe facial recognition plays a role, maybe something else. Either way, you can be sure that when you use Tinder, algorithms are influencing your choices, and you have no idea what those algorithms are doing.

Deep learning and neural networks

Just as machine learning is a subfield of AI, deep learning is a subfield of machine learning, and neural networks form the backbone of deep learning algorithms. That sounds complicated, and it probably is. For this crash course, the important thing to remember is that deep learning and neural networks are more complex methods of machine learning.

Let's look at an explanation video (two minutes) - again with some dogs in it.

Built like our brains?

Neural networks are called neural networks because they are loosely modelled on our brains: they consist of artificial neurons joined by weighted connections, a bit like synapses. There is input, there is feedback, and there is a process of getting better at something. However, thank God, I did not have to show my daughter millions of pictures of dogs before she could finally recognize one.

So maybe our brains work very differently after all.

The Black Box

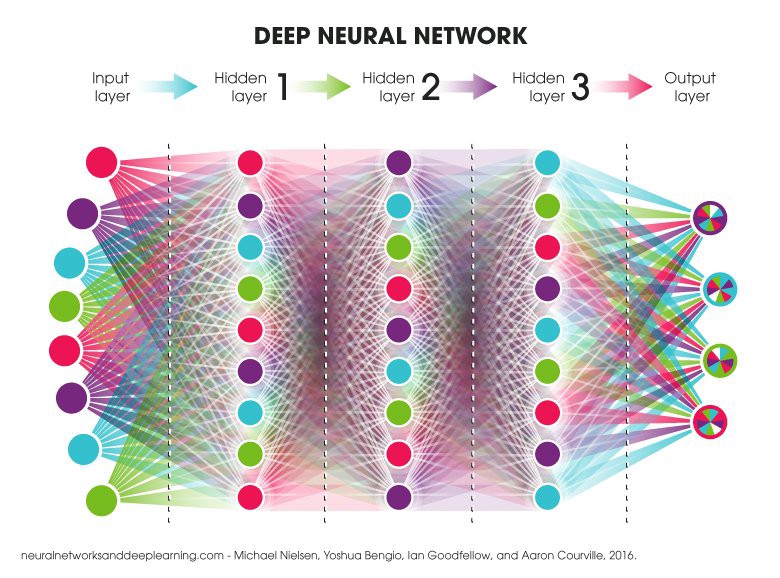

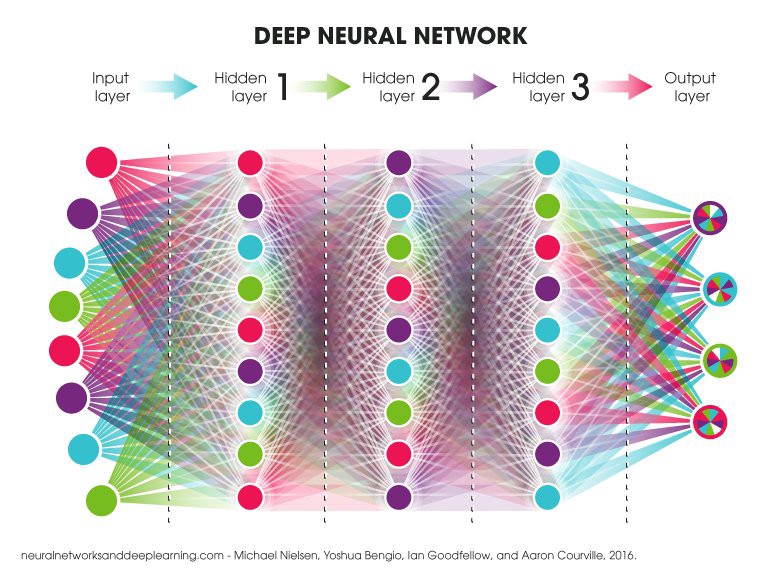

Neural networks - represented in a simple way - look like the picture below.

(picture from Towards Data Science)

(picture from Towards Data Science)

A neural network consists of three kinds of layers:

- An input layer;

- A hidden layer;

- An output layer.
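To make the three layers concrete, here is a minimal sketch of a single "forward pass" in plain Python. The network size (3 inputs, 2 hidden neurons, 1 output), the sigmoid activation and all the weights are made-up assumptions for illustration; a real image classifier has far more neurons and learns its weights from data instead of having them typed in.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def layer(inputs: list[float], weights: list[list[float]], biases: list[float]) -> list[float]:
    """One fully connected layer: each neuron takes a weighted sum of all inputs plus a bias."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


# Hypothetical, hand-picked weights: 3 inputs -> 2 hidden neurons -> 1 output.
HIDDEN_WEIGHTS = [[0.5, -0.2, 0.8], [-0.6, 0.9, 0.1]]
HIDDEN_BIASES = [0.0, 0.1]
OUTPUT_WEIGHTS = [[1.2, -0.7]]
OUTPUT_BIASES = [-0.1]


def forward(inputs: list[float]) -> float:
    hidden = layer(inputs, HIDDEN_WEIGHTS, HIDDEN_BIASES)  # input layer -> hidden layer
    output = layer(hidden, OUTPUT_WEIGHTS, OUTPUT_BIASES)  # hidden layer -> output layer
    return output[0]  # a score between 0 and 1, e.g. "how dog-like is this?"


if __name__ == "__main__":
    print(forward([1.0, 0.0, 1.0]))
```

Everything the network "knows" lives in those weight lists; the hidden layer is simply the set of intermediate numbers nobody asked for directly.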

As we have already seen, machine learning needs a lot of input before the software can start to 'learn.' Computers keep getting faster, so neural networks 'learn' faster and faster. Let's look at a fun example of a fast computer (30 seconds).

The input is labelled: we have to tell the software whether a picture shows a dog or not. This is important. If I had shown my daughter pictures of dogs from an early age and kept saying they were cats, she would probably have mistaken every dog for a cat. Because people decide on the labels, the way we label the input is always subjective (as we saw in crash course four).

The objective of AI is always subjective.
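The point about labels can be shown with a tiny supervised-learning sketch. It uses logistic regression (a much simpler cousin of a neural network, swapped in here for brevity) trained on a hypothetical toy dataset in which each "picture" is reduced to two made-up features. Training the same code once on honest labels and once on deliberately flipped labels shows that the model learns whatever the labels tell it, just like the daughter who was told every dog was a cat.

```python
import math

# Hypothetical toy data: each "picture" is reduced to two made-up features
# (say floppy_ears and barks, each between 0 and 1). Label 1 = dog, 0 = not a dog.
PICTURES = [(0.9, 0.8), (0.8, 1.0), (1.0, 0.7), (0.1, 0.0), (0.2, 0.1), (0.0, 0.2)]
TRUE_LABELS = [1, 1, 1, 0, 0, 0]


def train(pictures, labels, epochs=1000, lr=0.5):
    """Logistic regression by gradient descent: nudge the weights after every labelled example."""
    w1 = w2 = b = 0.0
    for _ in range(epochs):
        for (x1, x2), y in zip(pictures, labels):
            p = 1 / (1 + math.exp(-(w1 * x1 + w2 * x2 + b)))  # current guess
            error = p - y  # feedback: how far was the guess from the label?
            w1 -= lr * error * x1
            w2 -= lr * error * x2
            b -= lr * error
    return w1, w2, b


def is_dog(model, picture) -> bool:
    w1, w2, b = model
    return w1 * picture[0] + w2 * picture[1] + b > 0


if __name__ == "__main__":
    new_dog = (0.95, 0.9)
    honest = train(PICTURES, TRUE_LABELS)
    flipped = train(PICTURES, [1 - y for y in TRUE_LABELS])  # every dog labelled "not a dog"
    print(is_dog(honest, new_dog))   # True
    print(is_dog(flipped, new_dog))  # False
```

The code and the pictures are identical in both runs; only the human-chosen labels differ, and so does the answer.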

Furthermore, it is important to remember that the input is usually data created in the past, so it reflects the choices and prejudices of the past. Therefore the input is often biased. Simply put: if you want to create a new future, can you do that with a system trained on data from the past? There are many examples of biased AI, which we will discuss in crash course six (inclusivity).

There are, of course, also many wonderful applications of neural networks in which prejudice plays no role or a smaller one. Skin cancer detection is one example.

Gaydar

The above was quite interesting, don’t you agree?

However, artificial neural networks only get really interesting when we focus on the hidden layers. It is intriguing that these layers are actually called “hidden layers.” Not descriptive layers or definition layers, no: hidden.

An artificial neural network makes no secret of the fact that something mysterious is going on.

A good way to explain the importance of the hidden layer is the example of the gaydar. The gaydar (gay radar) is the popular idea that gay men are better than heterosexual men at recognizing other gay men. However, when this is tested by showing people photos, the gaydar often turns out to be a myth.

But Michal Kosinski, a researcher at Stanford, trained an AI to recognize sexual orientation from photos of faces. Here is an explanation of the study and its results in a one-minute video:

Using “cheap” AI, Kosinski went a long way towards recognizing gay men, and he wrote a paper warning about the risks, which caused a lot of unrest. His conclusions have since come under scrutiny: he used pictures from dating sites, which were biased because gay people chose photos to look attractive to other gay people. Still, a system like the AI gaydar is really creepy if you think about countries that still have the death penalty for homosexuality.

However, the most disturbing thing about systems like the AI gaydar is that it is almost impossible to explain exactly HOW they work. The outcome is measurable, so we can conclude THAT the system works, but if you ‘open’ the black box, you find a spaghetti of millions of weighted connections that is almost impossible to unravel.

Remember the picture above? Now imagine that there are thousands of hidden layers and a system that creates layers itself. That is one colourful black box.
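To get a feel for why the spaghetti is so hard to unravel, we can count the weighted connections in a plain fully connected network: every neuron in one layer connects to every neuron in the next, plus one bias per neuron. The layer sizes below (a hypothetical 100 × 100 grey-scale picture as input, hidden layers of 1,000 neurons, two outputs) are assumptions for illustration; real image networks are often built differently, but the order of magnitude makes the point.

```python
def count_parameters(layer_sizes: list[int]) -> int:
    """Weights plus biases of a fully connected network with the given layer sizes."""
    return sum(
        n_in * n_out + n_out  # one weight per connection, one bias per receiving neuron
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )


if __name__ == "__main__":
    # 10,000 input pixels, three hidden layers of 1,000 neurons, 2 outputs (dog / not a dog).
    print(f"{count_parameters([10_000, 1_000, 1_000, 1_000, 2]):,}")   # → 12,005,002
    # The same network with 100 hidden layers instead of three.
    print(f"{count_parameters([10_000] + [1_000] * 100 + [2]):,}")     # → 109,102,002
```

Even this modest network has more than twelve million numbers, none of which carries a human-readable meaning on its own.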

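Suppose we asked Michal Kosinski to create an AI gaydar that can explain itself, for example: “if the person has a moustache, his gay factor increases by x,” and so on. The result would probably be a less reliable system, because a short list of human-readable rules cannot capture the subtle combinations of millions of weighted connections that make the black box accurate.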
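A self-explaining system of the kind described here is essentially an additive scoring rule: each human-readable feature adds a fixed amount to a score, and the explanation is just the list of those contributions. Rather than build a gaydar, the sketch below uses a harmless hypothetical dog detector; the feature names, weights and threshold are invented for illustration.

```python
# Hypothetical, hand-written weights: how much each yes/no feature adds to the "dog score".
WEIGHTS = {"has_fur": 1.0, "barks": 3.0, "has_whiskers": -1.5, "wags_tail": 2.0}
BIAS = -2.5      # starting score before any feature is considered
THRESHOLD = 0.0  # scores above this count as "dog"


def explain(features: dict[str, bool]) -> tuple[bool, list[str]]:
    """Score a picture and return the decision plus a readable reason for every point."""
    score = BIAS
    reasons = [f"start at {BIAS:+.1f}"]
    for name, weight in WEIGHTS.items():
        if features.get(name, False):
            score += weight
            reasons.append(f"{name}: {weight:+.1f}")
    verdict = "dog" if score > THRESHOLD else "not a dog"
    reasons.append(f"total {score:+.1f} -> {verdict}")
    return score > THRESHOLD, reasons


if __name__ == "__main__":
    decision, why = explain({"has_fur": True, "barks": True, "wags_tail": True})
    for line in why:
        print(line)
```

Here the explanation ends with a total of +3.5, i.e. "dog", and every step can be read and questioned. That is exactly what a deep network cannot offer, but four hand-picked features are also far cruder than what a network extracts from millions of pixels, which is the trade-off the text describes.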

So there is a trade-off between accuracy and transparency.

Quick question: can you imagine a future where a doctor recommends a treatment, and when you ask “Why, doctor?” the answer is: “I don't know, and if I did know, my advice would probably be worse!” Would you want to live in a future like that?

Or do you think it is better if AI can explain itself? That is the topic of the next section.