Section Three - Racial bias in technology

A story about a racist soap dispenser, camera and racist AI (8 minutes)

Is technology racist?

That is a good question. Technology in itself is, of course, not racist. The real question concerns the people who design, program or use the technology. Are they racist? In many cases, yes.

As a result, technology can have negative consequences for people of a certain race. This can be the result of ignorance, conscious design choices, mistakes, or carelessness.

Let's discuss some examples to paint a picture.

Note: The grouping of the examples below is somewhat arbitrary. We are not always sure whether the consequences of a technology were designed in consciously or unconsciously. The intention is simply to give you an impression of the problem.

Mistakes

Below are some examples of products that were designed without taking race into consideration.

We start with an automatic soap dispenser that does not recognise black skin (video, 1 minute).

The design team evidently did not think to test the dispenser with a dark-skinned person.

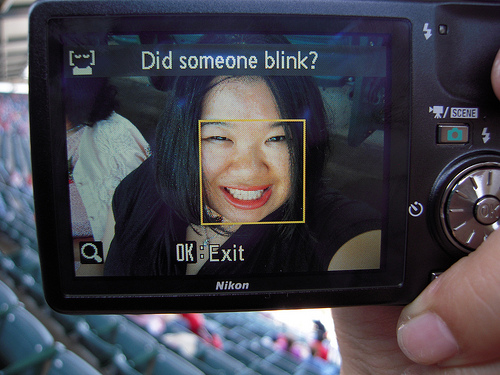

Next, the Nikon Coolpix S630, a camera that often asked "Did someone blink?" when taking pictures of Asian faces. It is the same type of problem.

(Picture from PetaPixel, 2010)


The examples above are a bit older. The camera problem is ten years old, but the problem still exists. However, today it is harder to hide behind mistakes. For example, facial recognition software is increasingly being used in law enforcement, and it is another potential source of both race and gender bias. In February 2018, Joy Buolamwini at the Massachusetts Institute of Technology found that three of the latest gender-recognition AIs, from IBM, Microsoft and the Chinese company Megvii, could correctly identify a person’s gender from a photograph 99 percent of the time, but only for white men. For dark-skinned women, the error rate rose to as much as 35 percent. That increases the risk of false identification of women and minorities.

The reason is the data on which the algorithms are trained: it contains far more white people than people of colour, and often far more men than women, so the resulting system is better at identifying white men. IBM quickly announced that it had retrained its system on a new dataset, and Microsoft said it had taken steps to improve accuracy. Algorithms that cannot correctly identify people of a different race are especially troubling when you think about, for example, self-driving cars making split-second decisions.
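One way to make this kind of gap visible is to report accuracy per group instead of a single overall figure. The sketch below is only an illustration: the group names, labels and counts are invented, and the helper `accuracy_by_group` is hypothetical rather than part of any of the systems mentioned above.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return {group: accuracy} for (group, true_label, predicted_label) records."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, truth, predicted in records:
        total[group] += 1
        if truth == predicted:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


# Invented toy data: group_a is heavily over-represented, group_b is not.
records = (
    [("group_a", "male", "male")] * 99
    + [("group_a", "male", "female")] * 1
    + [("group_b", "female", "female")] * 13
    + [("group_b", "female", "male")] * 7
)

# A single overall number looks reassuring...
overall = sum(truth == predicted for _, truth, predicted in records) / len(records)
# ...while the per-group breakdown shows who pays for the errors.
per_group = accuracy_by_group(records)

print(f"overall accuracy: {overall:.2f}")
for group, accuracy in per_group.items():
    print(f"{group}: {accuracy:.2f}")
```

With these made-up numbers the overall accuracy is above 90 percent, even though the smaller group is misclassified far more often. Because the large group dominates the average, a single figure can hide the problem.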

A real-world example: a company recruits personnel via video. The software looks at expressions and emotions and advises the personnel officer. However, people of colour are consistently given a negative rating because the software has difficulty recognising emotions on faces with dark skin tones. Because their emotions are not recognised, applicants of colour are categorised as uninterested or unenthusiastic.

Another recent example: software that monitors online tests works by watching the student's face. However, this does not work well for students of colour, who therefore experience extra stress and sometimes have to take the test with a bright lamp shining on their face, as if they were being questioned by the police in an old movie.

(Twitter Pic, 2020, @ArvidKhan)


In general, it can be said that what could happen accidentally in 2010 is now a lot more problematic. Fortunately, although things still often go wrong, we see that technology designers and programmers are increasingly aware of the impact of their technology on people of different races. At times, however, the technology still seems deliberately racist.

Racist algorithms

In crash courses four and five and in the previous section we have seen the importance of the data with which an advanced algorithm is 'trained.' If this data is racist, then chances are that the output of the algorithm will be racist too. Let's look at some prominent examples.

COMPAS is an algorithm widely used in the US to guide sentencing by predicting the likelihood of a criminal reoffending. In perhaps the most notorious case of AI prejudice, in May 2016 the US news organisation ProPublica reported that COMPAS is racially biased. According to the analysis, the system predicts that black defendants pose a higher risk of recidivism than they actually do, and the reverse for white defendants. Equivant, the company that developed the software, disputes that. It is hard to discern the truth, or where any bias might come from, because the algorithm is proprietary and so not open to scrutiny. But in any case, if a study published in January 2018 is anything to go by, when it comes to accurately predicting who is likely to reoffend, it is no better than random, untrained people on the internet.
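A claim that a system rates one group as riskier than it really is can, in principle, be checked by comparing false positive rates: of the people who did not reoffend, what share was flagged as high risk anyway? The sketch below only illustrates that calculation. The two groups, the field names and all the counts are invented, the helper `make` is hypothetical, and nothing here reproduces COMPAS or ProPublica's actual data.

```python
def false_positive_rate(records):
    """Share of people who did not reoffend but were still flagged as high risk."""
    no_reoffence = [r for r in records if not r["reoffended"]]
    if not no_reoffence:
        return 0.0
    return sum(r["flagged"] for r in no_reoffence) / len(no_reoffence)


def make(group, reoffended, flagged, count):
    """Build `count` identical toy records (hypothetical helper)."""
    return [{"group": group, "reoffended": reoffended, "flagged": flagged}] * count


# Invented numbers for two hypothetical groups; these are NOT real figures.
records = (
    make("A", reoffended=False, flagged=True, count=40)
    + make("A", reoffended=False, flagged=False, count=60)
    + make("B", reoffended=False, flagged=True, count=20)
    + make("B", reoffended=False, flagged=False, count=80)
)

rates = {
    group: false_positive_rate([r for r in records if r["group"] == group])
    for group in ("A", "B")
}
for group, rate in rates.items():
    print(f"group {group}: false positive rate {rate:.2f}")
```

In this toy data, people in group A who did not reoffend are wrongly flagged twice as often as people in group B. An audit of this kind needs access to both the predictions and the real outcomes, which is difficult when the system is proprietary.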

We have already talked about the phenomenon that advanced AI lacks transparency (it is a black box). This problem gets bigger if the AI is also proprietary, as in the example of COMPAS.

And then we have PredPol. Already in use in several US states, PredPol is an algorithm designed to predict when and where crimes will take place, with the aim of helping to reduce human bias in policing. But in 2016, the Human Rights Data Analysis Group found that the software could lead police to unfairly target certain neighbourhoods. When researchers applied a simulation of PredPol’s algorithm to drug offences in Oakland, California, it repeatedly sent officers to neighbourhoods with a high proportion of people from racial minorities, regardless of the true crime rate in those areas.

In response, PredPol’s CEO pointed out that drug crime data does not meet the company’s objectivity threshold, and so in the real world the software is not used to predict drug crime in order to avoid bias. Even so, in 2017 Suresh Venkatasubramanian of the University of Utah and his colleagues demonstrated that because the software learns from reports recorded by the police rather than actual crime rates, PredPol creates a “feedback loop” that can exacerbate racial biases.
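The feedback loop can be illustrated with a deliberately oversimplified toy model. This is not PredPol's algorithm, and it is not the researchers' simulation. It assumes two invented neighbourhoods with exactly the same true incident rate, a rule that always sends the patrol to the area with the most recorded incidents, and incidents that are only recorded where officers are present.

```python
def simulate_patrols(recorded, true_rate, days):
    """Each day, patrol the area with the most recorded incidents so far.

    Incidents are only recorded where officers are present, so the
    patrolled area is the only one whose count can grow.
    """
    recorded = dict(recorded)  # copy so the caller's data is left untouched
    for _ in range(days):
        target = max(recorded, key=recorded.get)
        recorded[target] += true_rate[target]
    return recorded


# Hypothetical neighbourhoods with nearly identical historical records...
history = {"north": 11, "south": 10}
# ...and exactly the same true number of incidents per day.
true_rate = {"north": 1, "south": 1}

after = simulate_patrols(history, true_rate, days=100)
print(after)  # {'north': 111, 'south': 10}
```

A one-incident difference in the historical records is enough to send every patrol to the same neighbourhood. After 100 days the recorded data appears to confirm that it is far more dangerous, although the true rates are equal.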

Both examples above show that algorithms that are trained with racist data in a black box can lead to racist output, which in turn can lead to a self-fulfilling prophecy.

Biased systems produce biased algorithms.

Racist algorithms - by mistake

The makers of COMPAS and PredPol knew, or could have known, that their input was racist. Often, however, algorithms produce racist results that would have been more difficult for the designers of the technology to predict.

Let's take a look at some examples.

Google Photos labelled pictures of black people as gorillas. Or what about this 30-second video about Google’s idea of three black teenagers versus three white teenagers:

Google works hard at correcting results like the one in the video above, but it is a perfect example of a biased system (Google algorithms and its users) producing biased results.

A freaky example: there were far fewer PokéStops in minority neighbourhoods. That was strange, because at the same time Holocaust memorial sites were complaining all the time about Pokémon popping up.

Another example. In October 2017, police in Israel arrested a Palestinian worker who had posted a picture of himself on Facebook posing by a bulldozer with the caption “attack them” in Hebrew. Only he hadn’t: the Arabic for “good morning” and “attack them” are very similar, and Facebook’s automatic translation software chose the wrong one. The man was questioned for several hours before someone spotted the mistake. Facebook was quick to apologise.

#Sorry.

In 2020, Twitter users discovered that the platform automatically cropped photos in a way that favoured white faces. The probable reason is that the algorithm had been trained on data from people looking at photos while cameras tracked which part of the photo they found most interesting. But when white people look at white people in photos, things go wrong.

Coded racism

In crash course seven (stakeholders & platforms) we talk about the fact that platforms are opinions or worldviews translated into code. This can also be a racist worldview.

For example, if AirBnB shows the ethnicity of someone who wants to rent a room, then it could become harder for certain people to rent a room. There is a lot of research showing that it is harder for a person of colour to rent a (popular) AirBnB. There is even a NoirBnB. This does not automatically mean that AirBnB is intentionally racist, but the way it is designed can have these results. Does an Uber driver see the ethnicity of their next customer? On kickstarter.com, do the best ideas get the most money? Or do the white men with ideas get the most money? On GoFundMe, does the best charity get the most money? Or does the money go to the little white girls with the suburban parents?

These choices in technology by platforms that are used by millions or billions of people cannot be taken lightly.

There are many more examples of 'racist technology,' from an Apple Watch having problems registering a heartbeat through dark skin, to medications that have mainly been tested on white people, to dating apps. We will provide you with a lot of examples in the additional materials. These underline the importance of being aware of racial bias when assessing, designing, programming, implementing or using a certain technology.

That awareness is needed to prevent digital redlining.

Digital Redlining

In the United States there is a term from the 1960s called redlining. This term refers to the systematic denial of various services by federal government agencies, local governments and the private sector to residents of specific, most notably black, neighbourhoods or communities. Nowadays, there is also something called digital redlining.

Digital redlining is the practice of creating and perpetuating inequities between already marginalized groups specifically through the use of digital technologies, digital content, and the internet.

Takeaways from section three:

- Technology can be racist;

- This can be by design (because it was the product of a racist system/trained on racist data);

- This can be by 'mistake' (because there was insufficient awareness of the possibility that it could be racist);

- Being aware of the potential impact of technology on certain parts of the population is therefore very important.