Section Five - Quantified Self

A story about arrogant scales (10 minutes)

Jump Around

We talked a lot in sections one to four about the negative impact of (new digital) technology on our autonomy and happiness. It was not a happy story at all. It was a bit like a house of pain: we jumped around topics considering our apps, smartphones, data and social media and it was mostly bad for our human values.

The most important thing for you to remember is that modern, digital technology can have a negative impact on our autonomy & happiness. Digital technology can limit our ability to make choices. It can make us anxious, jealous, insecure. This is especially true with modern, dominant new technologies (apps, social media, smartphones) where the values of the technology companies are not our core values.

It helps to understand the underlying mechanisms like we talked about in sections two to four.

We consciously chose to look at autonomy & happiness in connection with apps, social media and smartphones. This was just a choice, because we only have one hour and we wanted to inspire you. However, modern digital technology can influence autonomy and happiness in many different ways.

Take, for example, a Cry Analyzer. This is an app that analyzes the crying of your baby. The app can determine if the baby is hungry, in pain, or just wants attention. Is this good for your autonomy? Do you want an algorithm assisting you? Or do you trust your instincts? Does it bring peace of mind? Does it make you happy? We analyzed all these questions in one of our example cases in the Technology Impact Cycle Tool, so you can find out for yourself. We could do the same with technologies like connected doorbells, smart vacuum cleaners and so on.

In this section we look at a group of technologies that are designed to strengthen our autonomy and increase our happiness. We call this group the quantified self movement.

Quantified self

The term quantified self was coined by Gary Wolf and Kevin Kelly. Listen to one of the first explanations of quantified self by Gary Wolf (5 minutes).

Quick question: what do you think? Do you think these technologies (and mind you, this presentation is TEN years old) are going to help you? Or not? Would you use them? Why? Why not?

The classic pyramid

There are virtually no limits to what can be measured. Sensors are getting cheaper, popping up everywhere and getting better. More and more data is being produced. Wearables & trackers are getting more and more popular.

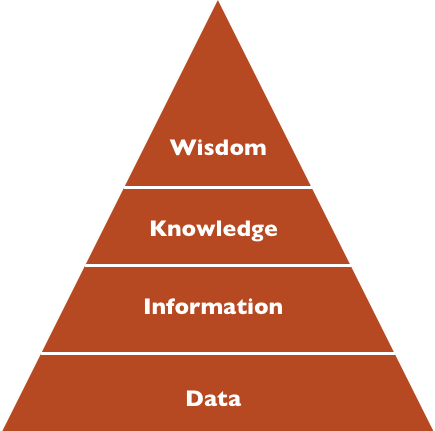

The real challenge, of course, is to convert that data into information, that information into knowledge and that knowledge into wisdom, following the well-known pyramid.

However, maybe this classic pyramid is no longer relevant. After all, how can you convert so much data into information? You cannot. Only algorithms (computer programs) can do that. But can we trust an algorithm?

For example, if you are wearing a sleep tracker it is very hard to look at the data yourself. Can you crunch the data and find out if you have slept well? Probably not, so you have to trust the computer program that makes a summary or a dashboard for you and maybe gives you some advice. But is the advice correct? How do you know?
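To make this concrete, here is a minimal sketch of how such a summary might be produced. Everything in it is an assumption for illustration: the heart-rate readings are made up, and the function `slept_well`, its threshold and its 25% cutoff are hypothetical, not taken from any real sleep tracker. It shows that the same night can get a different verdict depending on a choice the programmer made for you.

```python
# Hypothetical readings: one heart-rate sample (beats per minute)
# for each hour in bed. The numbers are made up for illustration.
heart_rate_per_hour = [59, 58, 55, 54, 57, 66, 53, 56]


def slept_well(samples, restless_threshold):
    """Return True if fewer than 25% of the hourly samples count as restless.

    Both the threshold and the 25% cutoff are assumptions chosen by whoever
    wrote the program, not facts about your sleep.
    """
    restless_hours = sum(1 for bpm in samples if bpm > restless_threshold)
    return restless_hours / len(samples) < 0.25


# Same night, same data, two different design choices.
strict_verdict = slept_well(heart_rate_per_hour, restless_threshold=56)
lenient_verdict = slept_well(heart_rate_per_hour, restless_threshold=60)

print("Strict app says you slept well:", strict_verdict)
print("Lenient app says you slept well:", lenient_verdict)
```

With the strict threshold four of the eight hours count as restless, with the lenient one only one hour does. The dashboard shows you the verdict, but usually not the threshold behind it.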

In the future, technology will continue to improve and disappear into the background. Standards will be created and a new profession may arise: The Personal Data Coach. Someone (or an algorithm in the long term) that looks at all your data and provides you with advice. The underlying idea of the quantified self is, of course, not only that you gain insight into yourself, but also that you do something with it. That you improve yourself. Upgrade yourself.

More autonomy (because of the insights) and more happiness (because of the improvements).

Bio-hackers are a window into the future. Bio-hackers measure, change things and investigate the impact of the things they changed. This ranges from influencing their diet to stimulating their brain waves, to modifying their DNA or implanting technology.

Quick questions: Do you think this all sounds good? When you measure things about yourself, you can make better decisions and you can become happier? Agreed? Right? Or not?

Some potential negative effects.

It is always good to be aware of potential negative effects. This can help you assess, judge, design, program and improve technologies. Even well-intended technologies like the quantified self solutions can have negative effects.

We listed some below.

First, privacy!

This is discussed at length in crash course number three, but it is especially important in the quantified self movement. There are a lot of apps, wearables and tools that measure you but don’t take privacy much into account. For example: the Fitbit. This is a wristband that keeps track of how much you sleep, how much you move and what your heart rate is. That information is completely private, because it is about you. However, you can only activate the Fitbit if you first allow your data to be shared with the (American) company. The company does that in accordance with its terms of service, but there are many concerns and loopholes. Certainly as a consumer, it is not easy to find out exactly what happens to the data of a device that measures your personal data.

Think about that for a moment. Who actually measures who? While you think you are measuring yourself, you are simultaneously being measured by someone else. While you measure your sleep behavior and experiment with improvements, someone else measures you too. While you watch Netflix, Netflix watches you. While you listen to Spotify, Spotify listens to you. Just like the white mice in The Hitchhiker's Guide to the Galaxy that conduct experiments on humans.

If we take autonomy seriously, we should therefore work on apps, tools and wearables where we ourselves are 100% owner of our data. Even if it means paying a little more. Even if it means paying for your privacy.

Second, data ownership

A second concern is that our bosses and our teachers also want access to our data. The Dutch Data Protection Authority recently banned the processing of employee health data by employers, but there is much more data. A lot of employers track the time you work (for example with a time clock). Why wouldn’t the employer keep track of how much you email, surf pointlessly, work from home, meet and so on?

The same applies to students. Nowadays, it is increasingly recorded how a student behaves in the e-learning environment (login behaviour, what they viewed, what they submitted and when). We call this Learning Analytics. But you can also keep track of how much time someone spends on campus. And what they do there. And how many times students check their phones during classes.

The problem, of course, is that it is your data. The quantified self movement is about you gaining insight and improving. Not about someone else gaining insight. Not even if this someone else has the best of intentions.

Third, solidarity

What about solidarity? If the rise of the quantified self leads to the quantified you, this offers the opportunity to connect conclusions to that data. For example, if you share your data with your health insurer (and you live healthily), you will receive a discount. Sounds good, or not? Many people in the Netherlands are open to that. The same principle applies to your driving behaviour. It sounds fair. Why do you, a perfectly healthy young man, have to pay as much as someone who neglects their health?

But there are three problems with this type of insurance based on (big) data from the quantified self.

Problem One: The infinite complexity of reality.

Health or safe driving behaviour is much more complex than the data suggests. This means that the data collected is not only a bad indicator, but also leads to an insatiable need for more data. If the data does not provide the correct results, then we need more data! This is, of course, problematic for privacy, as mentioned before. And no matter how much data you collect, it will still lead to simplification.

Quick question: The data says that married men live healthier lives. If I get a divorce, should I pay more for my health insurance?

Answer: Not if I am divorcing a wife who gives me stress, makes burgers every day, smokes and makes me drink, but that is not in the data.

Problem Two: The average becomes the norm.

If you measure something then there will be an average. And if there is an average there will be a wrong and a right. That is a big problem, because this way collecting data and the quantified self lead to mediocrity. Instead of taking advantage of the uniqueness of the quantified self (it’s about you, the unique you!), the opposite happens. We are increasingly moving to the average.
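A small sketch of how an average can turn into a norm. The names, the step counts and the 25% tolerance rule in the hypothetical function `verdict` are all made up for illustration. The point is that a rule built around the average cannot tell the difference between someone who moves very little and someone who is training for a marathon.

```python
from statistics import mean

# Hypothetical daily step counts for five people (made-up numbers).
daily_steps = {"Ann": 4000, "Bo": 9000, "Cem": 10000, "Dee": 11000, "Eli": 26000}

group_average = mean(daily_steps.values())


def verdict(steps, average, tolerance=0.25):
    """Label a person by their distance from the group average.

    The 25% tolerance is an assumed rule; any such cutoff is a design choice.
    """
    if abs(steps - average) <= tolerance * average:
        return "normal"
    return "deviant"


labels = {name: verdict(steps, group_average) for name, steps in daily_steps.items()}

for name, label in labels.items():
    print(name, daily_steps[name], label)
```

Ann and Eli both end up labelled as deviant, even though they differ from the average in opposite directions. Anyone who wants the "normal" label has an incentive to move toward the middle.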

Problem Three: Solidarity is under pressure.

The insurer’s system is based on the healthy person paying for the less healthy person. How will that system continue to work if the premium is linked to quantified self data? And what does that mean for people who do not show risk-averse behaviour? For innovators, adventurers, entrepreneurs who work 18 hours a day, and so on?

Will being boring be rewarded?
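The pressure on solidarity can be illustrated with some simple arithmetic. This is only a sketch: the four people and their expected yearly costs are invented numbers, and real insurance premiums are calculated in far more complicated ways.

```python
# Hypothetical expected yearly health costs per person, in euros (made up).
expected_costs = {
    "healthy_a": 500,
    "healthy_b": 500,
    "healthy_c": 500,
    "chronically_ill": 6500,
}

# Solidarity model: everyone pays an equal share of the total cost.
total_cost = sum(expected_costs.values())
solidarity_premium = total_cost / len(expected_costs)

# Data-driven model: everyone pays their own expected cost.
risk_based_premiums = dict(expected_costs)

for person, own_cost in risk_based_premiums.items():
    print(f"{person}: solidarity {solidarity_premium:.0f}, data-driven {own_cost}")
```

In the solidarity model everyone pays 2000 euros. In the data-driven model the healthy people pay 500 euros and the chronically ill person pays 6500 euros. The total is the same, but who carries it is completely different.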

At the same time, the quantified self movement can also be a driver of solidarity. This movement is also known as the quantified us. The idea is that groups of people share their quantified self data and (automatically) learn from each other. Suppose you have diabetes or epilepsy, or you sleep poorly, and you share your data with others. That enables you to find solutions for your problem together. The intriguing thing is, of course, that on the one hand there are enormous concerns about the security and privacy of the digital patient file and on the other hand platforms are popping up everywhere on which patients seem to carelessly share their data.

Takeaways from section five

- Technology can help you make better decisions and improve;

- Technology can give you more autonomy and make you happier;

- Quantified self is an example. However, even with well-intended technology there are a lot of pitfalls to be aware of.

Some final words on crash course two

Congratulations! You have completed this crash course, so you got a very small taste of thinking about technology and the impact of technology on you and on society. This was just an appetizer. If you are going to assess, design, program, discuss, use or invent technology we would like you to remember that:

- Technology has impact on human values;

- Two important human values are autonomy (the ability to make (good) choices) & happiness;

- These values are threatened by modern, digital technology implementations & corresponding business models;

- The attention merchant wants to grab your attention;

- The surveillance capitalist wants to automate you;

- The app-designer wants to make you addicted;

- They all try to influence your ability to make choices and play on your anxieties and insecurities;

- These are threats, but technology can also strengthen your values (like with the quantified self, but even then you have to be aware of pitfalls).

Want more?

This is a crash course. It only took one hour to complete. If you want more, we have a lot of options.

- First, you can check out section six, with all kinds of additional materials. Section six is updated regularly;

- Second, you can do one of the other ten crash courses;

- Third, you can look at the case examples in the Technology Impact Cycle Toolkit (especially the category human values), for example the Griefbot case;

- Fourth, you can start using the Technology Impact Cycle Tool yourself, especially the questions on human values.

Finally, do you have any suggestions or remarks on this course? Let us know at info@tict.io.